Design Assessment Methods

One goal of program assessment is to determine if students are meeting the expectations of the program. This is accomplished by gathering evidence and comparing it against targets you predetermine for your program.

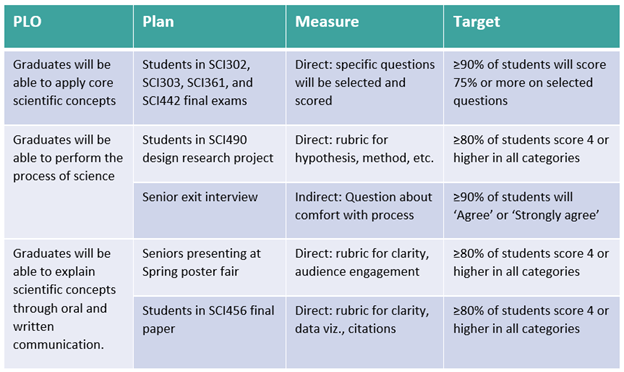

To do this, you need three things: a plan (where to collect evidence), a measure (what evidence to collect), and a performance target (how to know whether students are succeeding).

Plan: where to collect evidence

An essential part of planning your assessment methods is determining which courses to use to gather evidence and which students should be included in the assessment.

Work with instructors to gather evidence

As an Assessment Leader, you should partner with instructors to collect evidence in their courses. Programs offered at multiple campuses with identical program learning outcomes (PLOs) can collaborate by using the same measures and then interpreting their findings in the context of each campus. In some cases, these programs can also collaborate on reporting; consult your Assessment Liaison for more information.

Focus on high-level courses

At its most basic level, learning outcomes assessment determines whether students have the skills and knowledge necessary for success in their discipline by the time they complete the program. Therefore, it is essential to measure student learning near the end of your program, when students are expected to have achieved mastery. For baccalaureate programs, this means measuring student performance in a capstone or other 400-level course; associate programs can focus on 200-level courses. Graduate programs have prime opportunities to measure student learning through the thesis or dissertation, qualifying and candidacy exams, and the thesis or dissertation defense. Refer to your curriculum map to determine which courses and milestones align with mastery of each PLO.

Majors vs. Non-majors

Program assessment should measure the culmination of learning after students have completed your curriculum, so evidence from non-majors who happen to be taking a course in your program does not apply. You do not need to collect evidence from non-majors, and if you happen to collect it, remove it. Submit only evidence from majors in your program in your report.

Unique Features

World Campus students: Programs with both residential and World Campus students must ensure that their assessment measures include both groups of students. The method description should make it clear that both groups are being assessed.

Certificate Programs: Often, students apply for a certificate program after completing the required courses. This can make it impossible to know which students in a given course are associated with a certificate program. When conducting assessment, if it is unclear which students are associated with your certificate program, all students in a course may be used as a proxy.

Small programs: Some programs have very few graduates from year to year. Our Assessing Small Programs webpage describes several strategies for gathering robust evidence in this scenario.

Large programs: Conducting assessment in large courses with hundreds of students may place an excessive burden on instructors. In those instances, programs can sample an appropriate number of students to effectively measure learning outcomes.
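One common way to sample is a simple random sample, which gives every student in the course the same chance of being selected. The Python sketch below is illustrative only: the function name `draw_sample`, the roster, the sample size of 60, and the seed are all hypothetical, and the code does not decide what sample size is appropriate for your program.

```python
import random


def draw_sample(student_ids, n, seed=2024):
    """Return a simple random sample of n student IDs, drawn without replacement.

    A fixed seed (hypothetical value) makes the draw reproducible, so the
    same sample can be re-created if the results are reviewed later.
    """
    if n >= len(student_ids):
        return list(student_ids)  # smaller course: assess every student
    rng = random.Random(seed)
    return rng.sample(list(student_ids), n)


# Hypothetical roster of 300 majors enrolled in a large course
roster = [f"S{i:03d}" for i in range(1, 301)]
sample = draw_sample(roster, 60)
print(len(sample))  # 60
```

Recording the seed alongside the results lets you or a colleague reproduce exactly the same sample.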

Measure: what evidence to collect

Each PLO must be assessed using at least one measure; in some cases, multiple measures are appropriate.

Direct and Indirect measures

The two basic types of measures are direct measures and indirect measures. Direct measures ask students to demonstrate their knowledge and abilities, while indirect measures ask students to reflect on their knowledge and abilities or rely on other proxies for them. Using both types of measures provides the strongest evidence and is a best practice.

Direct measures

Direct measures use samples of student work. Examples include exams, papers, projects, thesis or dissertation defenses (when scored with a rubric), and internship supervisor evaluations.

Indirect measures

Indirect measures use perceptions or other proxies. Examples include student or alumni surveys, focus groups, and post-graduation employment.

Embedded assessments

An embedded assessment is an assignment or assessment activity that already occurs in a course or as part of the program experience, and using one can be advantageous. When well aligned with the PLO, an embedded assessment can reduce (or eliminate) added work for the instructor. Because it also counts toward a grade, it can motivate students to apply their best effort, providing a more robust measure than an optional, ungraded assignment.

The problem with grades and GPA

A course typically addresses a variety of objectives rather than a single PLO, and course grades also reflect other components such as participation, attendance, or grading curves. For these reasons, a final course grade is not an accurate measure of a PLO and should not be used for program assessment. Instead, your program should develop measures that align specifically with each of your PLOs. Grade point average (GPA) compounds these issues by combining grades across many courses, so it should not be used either.

Rubrics

Most assessment measures (with the exception of multiple-choice or fill-in-the-blank exams and quizzes) can benefit from a rubric. A rubric is an established scoring guide that breaks an assignment down into its component goals. The advantages of a rubric include clarifying goals, helping students understand expectations, and increasing scoring accuracy while decreasing scoring bias. When possible, use analytic rubrics, which provide rich descriptions of each performance level for every component, to increase scorer consistency and grading transparency. It is imperative that each rubric component aligns with the PLO being assessed.

For in-depth guidance on creating rubrics, one starting point is the Designing & Using Rubrics resource from the University of Michigan. According to Linda Suskie, one effective strategy is to find an existing rubric and modify it to suit your needs (Chapter 9, Assessing Student Learning: A Common Sense Guide, 2009). A good source of existing analytic rubrics is the American Association of Colleges and Universities (AAC&U), which brought together instructors from across the country to develop a series of rubrics addressing common learning objectives. These rubrics are freely available online.

Sample Rubrics

Use the list below to review sample rubrics designed for undergraduate and graduate programs, including rubrics created by the AAC&U and rubrics from other institutions (noted in parentheses).

- Design Project Rubric

- Liberal Arts – Anthropology Paper Rubric

- Liberal Arts – History Paper Rubric

- Liberal Arts – Philosophy Paper Rubric

- Oral – Presentation Rubric

- Science – Collect and Analyze Experimental Data

- Science – Design, Conduct, Application Experiments

- Science – Evaluate Models, Equations, Solutions, Claims

- Science – Multiple Representations of Information

- Anthropology-PhD Prelim Review (UMD)

- Biomedical Engineering Candidacy Written and Oral Defense Rubric (UPENN)

- Doctoral Dissertation Rubric (PDX)

- Economics Masters Research Paper Rubric (UCINN)

- Masters Capstone Project Rubric (UAKRON)

- Masters Research Paper Rubric (BU)

- Nutrition Dissertation; Proposal and Comprehensive Examination (UHAWAII)

- Oral Presentation Rubric (UNI)

- Research Paper; Lab Report; Oral Presentation Rubrics

- Thesis and Dissertation Rubric (UGA)

- Thesis Defense Rubric (Colorado Mines)

- Thesis Dissertation Proposal Rubric (URI)

The American Association of Colleges and Universities (AAC&U) is a global membership organization dedicated to advancing the vitality and democratic purposes of undergraduate liberal education. It has published 16 VALUE rubrics, which are freely available to the public. Word document versions of each rubric are also available.

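Target: how to know if students are successful

A performance target states the level of achievement that shows students are meeting a PLO. For example: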

- At least 85% of students will score 3 out of 5 or better on each of the final project rubric components related to written and oral communication.

- At least 90% of students will receive a score of 80 or higher on the communications section of the internship evaluation form.
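Checking a target like the first example above is simple arithmetic: for each rubric component, divide the number of students scoring at or above the threshold by the number of students assessed, then compare that share with the target. The Python sketch below assumes hypothetical scores for 20 students; the function name `target_met` and the data are illustrative, not part of any reporting system.

```python
def target_met(scores, threshold, target_share):
    """Return (share, met) for one rubric component.

    scores: rubric scores, one per assessed student
    threshold: minimum acceptable score (e.g., 3 on a 5-point scale)
    target_share: required proportion of students (e.g., 0.85 for 85%)
    """
    if not scores:
        raise ValueError("no scores to evaluate")
    share = sum(score >= threshold for score in scores) / len(scores)
    return share, share >= target_share


# Hypothetical scores for 20 students on two final project rubric components
components = {
    "written communication": [4, 3, 5, 3, 2, 4, 4, 3, 5, 3, 4, 3, 3, 4, 5, 2, 3, 4, 4, 3],
    "oral communication": [3, 2, 4, 2, 3, 2, 5, 3, 2, 4, 3, 3, 2, 4, 3, 3, 2, 4, 3, 3],
}

# The example target applies to each component separately, so check each one.
for name, scores in components.items():
    share, met = target_met(scores, threshold=3, target_share=0.85)
    print(f"{name}: {share:.0%} scored 3 or better; target met: {met}")
# written communication: 90% scored 3 or better; target met: True
# oral communication: 70% scored 3 or better; target met: False
```

In this hypothetical case the target is not met overall, because it requires every component to reach 85%; reporting each component separately shows where the shortfall is.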

Early in the assessment process, identifying performance targets may feel artificial, and it may take a few cycles to establish the right target. As you consider a potential target, ask yourself, “Would I feel confident standing in front of a group of my peers and industry professionals defending this target as an aggregate measure of program success?” Revise methods only when they do not provide actionable evidence, not because your students are failing to meet the target.

Maximizing your evidence collection

- Perhaps instructors believe students are not coming into the program with the necessary background knowledge and skills. Your assessment measures can include administering a pre-test in an early course and comparing the results with achievement levels in later courses.

- If instructors are interested in how students are developing throughout the program, your assessment strategy can include measures at various points throughout the curriculum. Consult your curriculum map to see which courses provide students with opportunities to Encounter, Practice, and Master your PLO of interest.

- Alternatively, instructors might want to know how demographics or experience affect student mastery of skills and knowledge. For example, a language program might want to know whether students who study abroad master learning objectives at a higher level than those who don’t. The program can record which students did and did not study abroad and compare learning outcomes between the two groups.

Disaggregating assessment evidence should be a routine activity. Although results for small groups of students may not generalize well, they should still be part of internal conversations. Aggregated evidence often masks the experiences of students on the margins and can obscure real differences in student experiences and outcomes.
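Disaggregation, such as the study-abroad comparison described above, amounts to grouping scored evidence by a student attribute and summarizing each group. The Python sketch below is a minimal illustration under stated assumptions: the function name `disaggregate`, the field names (`study_abroad`, `score`), the threshold of 3, and the records are all hypothetical. It reports the number of students in each group next to the share meeting the threshold, so small groups are easy to spot.

```python
from collections import defaultdict


def disaggregate(records, group_key, threshold):
    """Return {group: (number assessed, share scoring at or above threshold)}."""
    tallies = defaultdict(lambda: [0, 0])  # group -> [assessed, at or above threshold]
    for record in records:
        tally = tallies[record[group_key]]
        tally[0] += 1
        if record["score"] >= threshold:
            tally[1] += 1
    return {group: (n, met / n) for group, (n, met) in tallies.items()}


# Hypothetical records for a language program comparing study-abroad participants
records = [
    {"student": "S01", "study_abroad": True, "score": 4},
    {"student": "S02", "study_abroad": True, "score": 5},
    {"student": "S03", "study_abroad": True, "score": 3},
    {"student": "S04", "study_abroad": False, "score": 3},
    {"student": "S05", "study_abroad": False, "score": 2},
    {"student": "S06", "study_abroad": False, "score": 2},
    {"student": "S07", "study_abroad": False, "score": 4},
    {"student": "S08", "study_abroad": False, "score": 3},
]

summary = disaggregate(records, "study_abroad", threshold=3)
for group, (n, share) in sorted(summary.items()):
    # Report n alongside the share so small groups are easy to spot.
    print(f"study abroad = {group}: n = {n}, {share:.0%} scored 3 or better")
# study abroad = False: n = 5, 60% scored 3 or better
# study abroad = True: n = 3, 100% scored 3 or better
```

With groups this small, a gap like the one above is a prompt for internal conversation rather than a firm conclusion.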

How to add methods to Nuventive

Step 1: Log into Nuventive using your Penn State Access ID and password.

Step 2: Select the program you want to edit from the drop-down menu that appears when you click on the white bar at the top of the page (e.g., Program – Accounting (BS) – Harrisburg).

Step 3: Click the left-hand menu (three-line icon to the left of the program drop-down) and navigate to ASSESSMENT >> Plan and Findings.

Step 4: Double-click the PLO of interest to open it.

Step 5: Click the Assessment Method tab.

Step 6: Click the green plus sign icon at the upper right.

Note that the Method entered here should be specific about what to measure every time this PLO is assessed. This is not the place to add one-time details like dates or instructor names.

For additional details, including screenshots, refer to the Nuventive Handbook for Assessment Leaders.